Foresight scenarios

Foresight scenarios are a key methodology for policy-making and are therefore highly relevant to popAI’s overall objective, namely to foster trust in the application of AI in the civil security domain. The foresight scenario methodology serves as a platform for diverse stakeholders to come together and discuss, in a structured way, different and even opposing points of view, thereby assessing needs, preferences, and potential risks. Furthermore, the foresight scenarios that emerged from these discussions actively inform and support the design and development of a dedicated Roadmap of AI in Law Enforcement 2040.

In this context, the scenarios were co-produced through an iterative, collaborative approach that involved stakeholders on numerous occasions. A major aim of the foresight scenario methodology adopted is to foster communication and connection between individuals, groups, and organisations with different perspectives and values. The popAI foresight scenario method is informed by the project’s overall positive-sum approach, which promotes consensus on AI in policing among European LEAs and the stakeholders involved, with a view to developing a “European Common Approach”. Through broad acceptance of compliance and a future-focused roadmap, popAI aims to provide solid, high-impact recommendations to policy makers as they hash out an AI Act that ensures AI in policing is human-centred, socially driven, ethical and secure by design.

The foresight scenario activities delivered five different scenarios, each addressing challenges in one domain of law enforcement: crime prevention/predictive policing; crime investigation; cyber operations; migration, asylum, and border control; and administration of justice. The five scenarios are listed below.

Scenario 1

Past will always define future

(Crime prevention/predictive policing)

Scenario 2

AI investigator. Case closed

(Crime investigation)

Scenario 3

Don’t shoot the artist

(Cyber Operations)

Scenario 4

Crossing the invisible borders

(Migration, Asylum, and Border control)

Scenario 5

Guilty till proven innocent

(Administration of justice)

Scenario 1

Past will always define future

Crime prevention/predictive policing

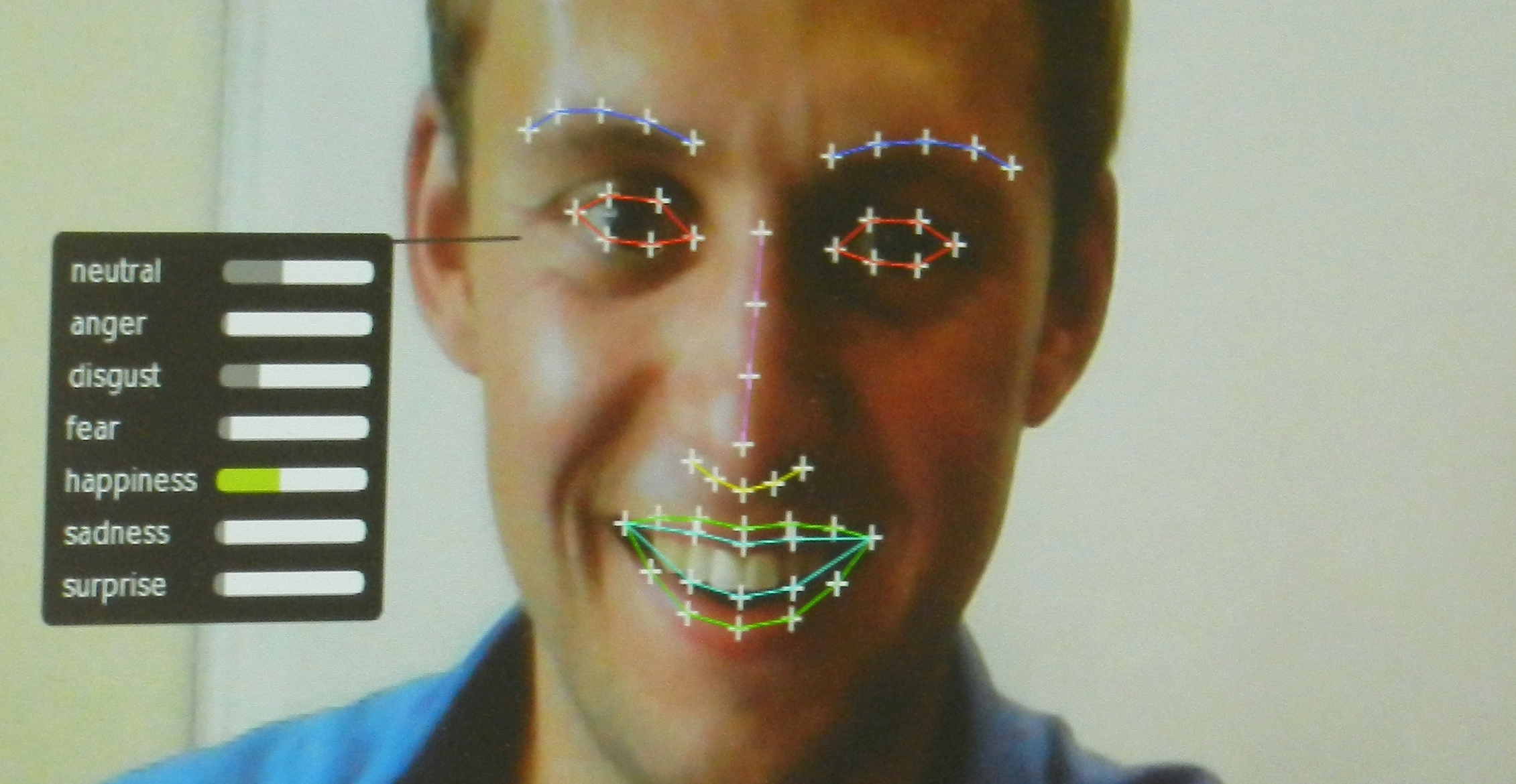

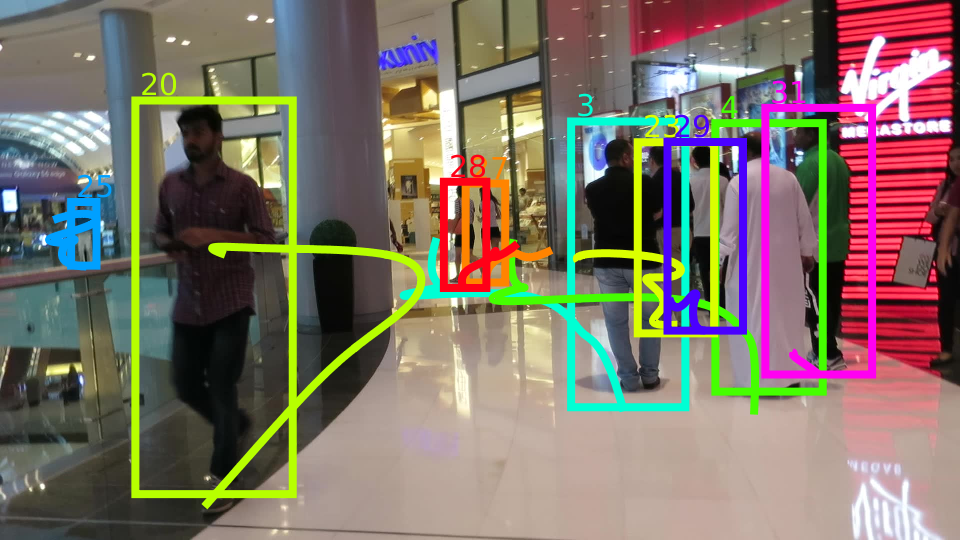

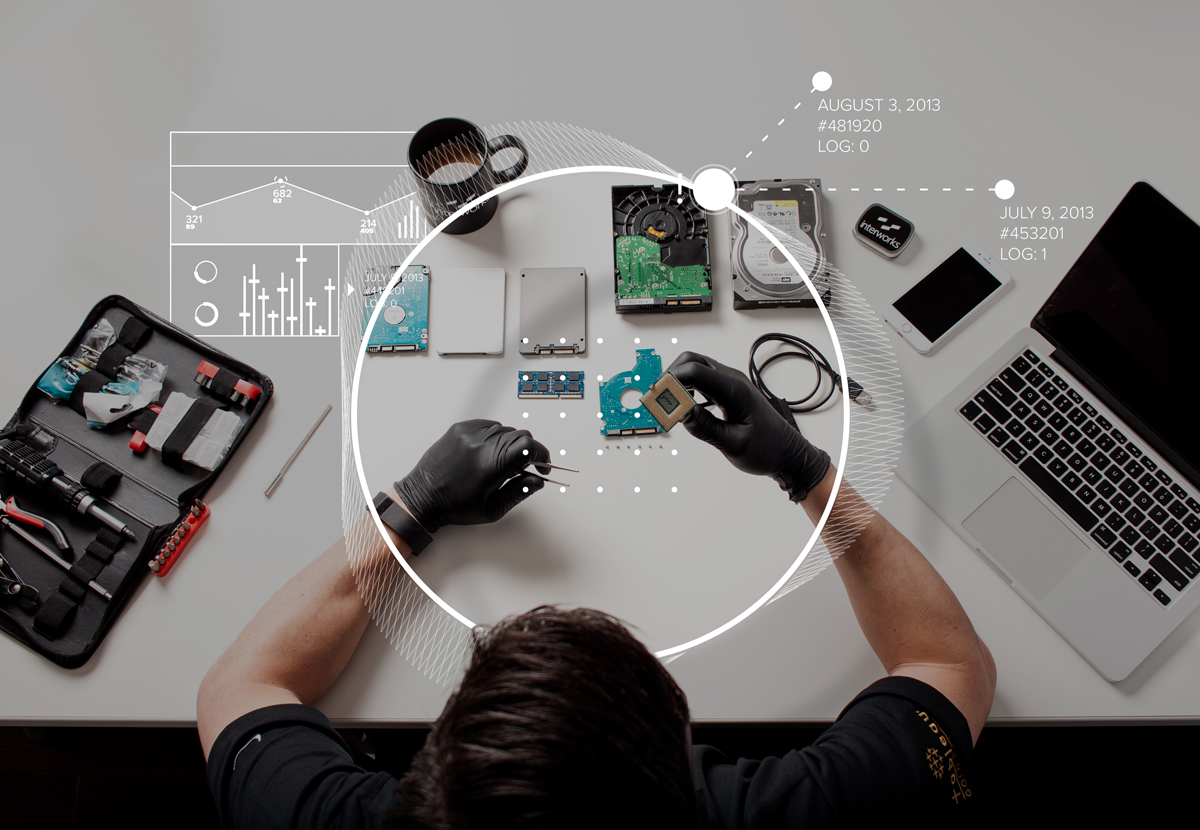

AI algorithms for civil security purposes use police data, combined with other datasets such as demographic data, abstracted data from mobile phones, and socio-economic data, as well as data from hotspot methods, to predict when and where criminal activities are most likely to occur. Interoperability of diverse data sources is authorised in support of crime prevention and community safety. Several local ‘blacklists’ have been created across European Member States that can be linked, compared, and updated at a European level. Based on advanced algorithmic processes, AI-powered surveillance systems are installed in areas flagged as high-risk, while drones often circle overhead.

Federico is an Italian political activist. He studied chemistry but is unemployed. As a teenager, Federico was a musician, and through his music he protested against xenophobia and racism. Because of his beliefs, he was often the victim of far-right extremists, but he never gave up on his ideas. Last year, Federico and his parents visited some family friends in Barcelona for two weeks. During their stay, his mother was feeling rather weak, so they mainly relaxed at their friends’ hotel without visiting tourist attractions. At that same time of the year, in Spain’s capital, there were riots on the streets against austerity, and several people were prosecuted. On their way back to Italy, Federico and his parents were asked a few questions by the airport security staff.

Two weeks after their return to Italy, Federico bought a ticket online for a big concert that didn’t match his taste in music. Political figures from the government would also be attending this concert. That same afternoon, Federico joined a Telegram group calling for action against European austerity policies. Some of the concert’s technicians were also members of this group, as were left-wing extremists.

On the night of the concert, Federico notices that a drone is following him. He has already had a difficult day. Suspecting that his past might still be triggering algorithmic systems to surveil him, he gets angry. The sensors in his car record Federico’s tension, and the algorithm flags him as a high-risk case. The AI-powered system sends a signal to the next available operational unit, selected on the basis of distance as well as available equipment, skills, and experience. A police car approaches him a few minutes later, and the police officer asks him to follow them to the nearest police station. Federico objects but complies with the request. He is soon released, as his case was a false positive. Police officers enter the new data into the system, and Federico’s score is updated.